How Technology is Revolutionizing Healthcare

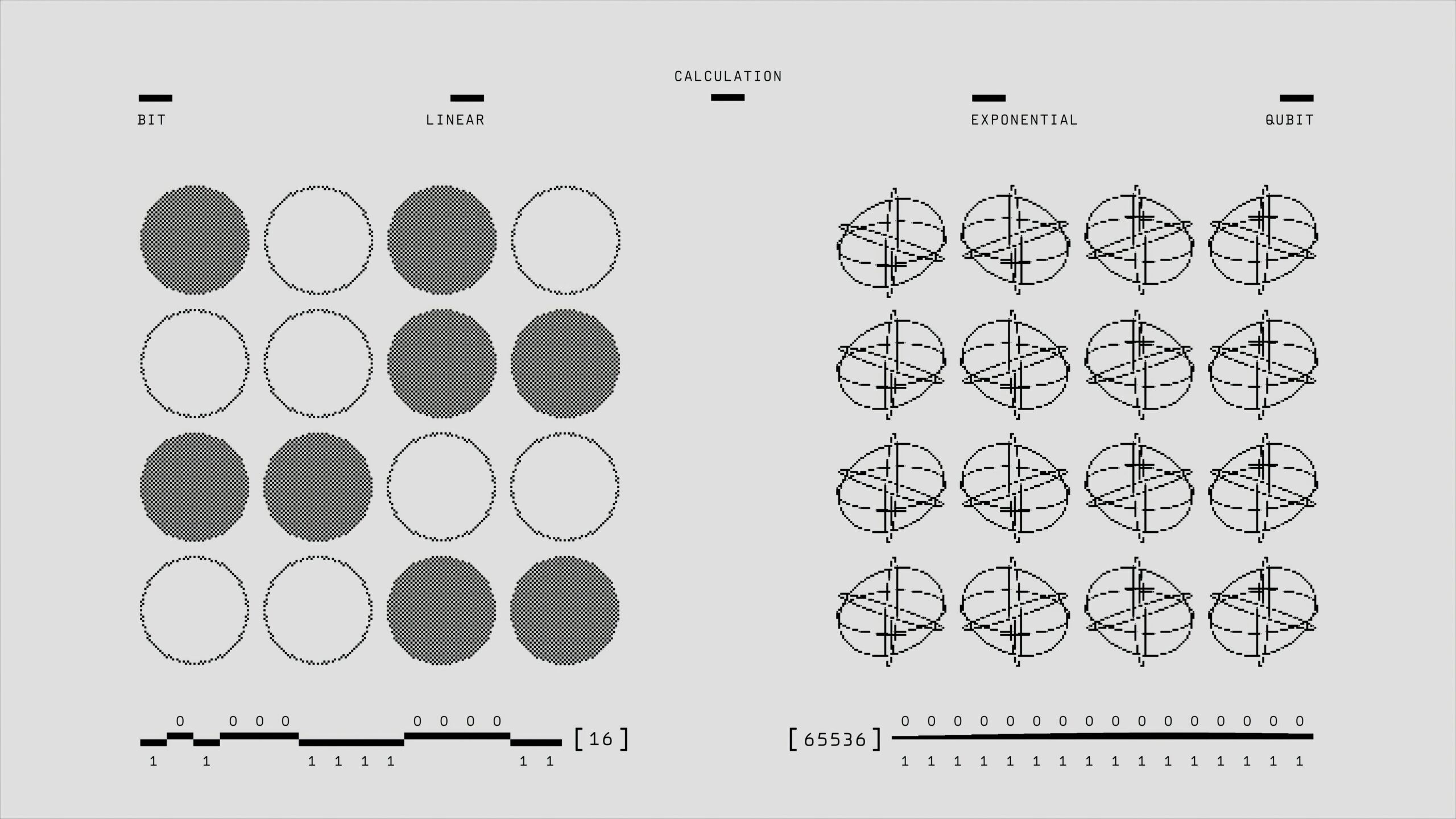

Introduction to Technological Advances in Healthcare

Advances in technology have reshaped numerous industries, and healthcare is no exception. Over the past few decades, technological innovations have profoundly influenced medical practice, transforming healthcare delivery, diagnostics, treatment, and patient care. Integrating modern technology has not only improved the efficiency and accuracy of medical procedures but has also opened new avenues for personalized and preventive medicine.

One of the most compelling reasons to integrate technology into modern healthcare is its potential to improve patient outcomes. By leveraging artificial intelligence, machine learning, and big data analytics, healthcare providers can analyze vast amounts of data faster and, in many cases, more accurately than before. This supports earlier diagnosis of diseases, more precise treatment plans, and a more comprehensive understanding of patient health trends.

Technology has also driven significant improvements in telemedicine, enabling remote consultations and care, which has proven especially valuable in rural and underserved areas. Wearable health devices and mobile health applications let patients monitor their health in real time and take a more active role in managing their care.

Technology's influence extends to the operating room and the imaging suite as well. It has paved the way for minimally invasive surgeries, robotic-assisted procedures, and advanced imaging techniques that provide clearer, more detailed views of the human body. These advances help healthcare professionals work with greater precision while reducing risks and recovery times for patients.

In light of these developments, technology is clearly revolutionizing healthcare, bringing about innovations that were once the stuff of science fiction.
As we move further into the digital age, the continued evolution and integration of technology is likely to remain a cornerstone of efforts to strengthen healthcare systems worldwide, leading to better health outcomes and improved patient experiences.

The Rise of Telemedicine

Telemedicine refers to the use of telecommunications technology to provide medical care and consultation remotely. This mode of healthcare delivery gained substantial momentum during the COVID-19 pandemic, when physical distancing measures limited face-to-face interactions. Its importance has grown rapidly because it enables healthcare providers to deliver essential services without requiring patients to visit medical facilities in person.

The benefits of telemedicine are broad. One of the most significant is improved access to care. For patients in rural or underserved areas, telemedicine bridges the gap to specialized healthcare providers who may be located many miles away. This reduces travel time and associated costs for patients and helps ensure timely diagnosis and treatment.

Telemedicine also offers considerable convenience. Using video conferencing, mobile apps, and other digital tools, patients can consult their healthcare providers from home. This is particularly beneficial for people with mobility issues or chronic illnesses and for those who need frequent follow-ups, since it reduces the number of trips to a clinic or hospital.

In terms of cost-effectiveness, telemedicine has the potential to reduce healthcare expenses significantly. It can lower overhead costs for medical facilities by reducing the need for physical space and in-person staff, and it eases the financial burden on patients by cutting travel expenses and time off work.
Moreover, early detection and continuous monitoring through telemedicine can help prevent hospital admissions and emergency room visits, potentially yielding substantial long-term savings.

Several platforms have emerged to meet the growing demand for telemedicine. Services such as Teladoc, Amwell, and Doctor on Demand offer a wide range of virtual healthcare options, including primary care, mental health consultations, and specialist advice. These platforms are designed to be user-friendly and secure, aiming to deliver high-quality care while safeguarding patient privacy.

Artificial Intelligence and Machine Learning in Diagnostics

Artificial intelligence (AI) and machine learning (ML) are transforming medical diagnostics. These technologies have enabled significant advances in disease detection, outcome prediction, and the personalization of treatment plans, improving both accuracy and efficiency. AI-powered tools in particular are becoming an integral part of modern healthcare, offering diagnostic capabilities that were previously unattainable.

One notable application of AI and ML in diagnostics is the early detection of disease. AI-based algorithms can analyze medical imaging data, such as MRI scans, CT scans, and X-rays, and in some studies have matched or exceeded the accuracy of human specialists. These algorithms identify patterns and anomalies indicative of conditions such as cancer, cardiovascular disease, and neurological disorders at an early stage, allowing timely intervention and better prognoses. A well-known example is Google's DeepMind, whose AI system has shown high accuracy in detecting eye diseases from retinal scans, potentially helping to prevent blindness.

AI and ML are also reshaping diagnostics through predictive analytics.
These technologies draw on large datasets from electronic health records (EHRs) to predict patient outcomes. For instance, machine learning models can analyze historical patient data to estimate the likelihood of disease progression, hospital readmission, and potential complications, helping healthcare providers tailor care plans proactively. IBM's Watson for Oncology illustrates this approach: it was designed to assist oncologists by suggesting personalized treatment options based on a large corpus of medical literature and clinical data, although its recommendations drew criticism in real-world use.

AI and ML also contribute to the personalization of treatment plans. By integrating genetic information, lifestyle factors, and clinical data, these technologies can help formulate individualized treatment strategies that improve therapeutic efficacy while minimizing adverse effects. Personalized medicine informed by AI shows particular promise in managing chronic conditions such as diabetes, where treatment plans can be adjusted continually based on real-time data and predictive assessments.

In essence, the adoption of AI and machine learning in diagnostics marks a pivotal shift toward a more precise, efficient, and patient-centered model of healthcare. These technologies not only augment the diagnostic process but also enable early detection and personalized treatment, ultimately improving patient outcomes and the