Apple Launches iPhone 16 Pro and Pro Max – The Next Big Leap in Smartphone Technology

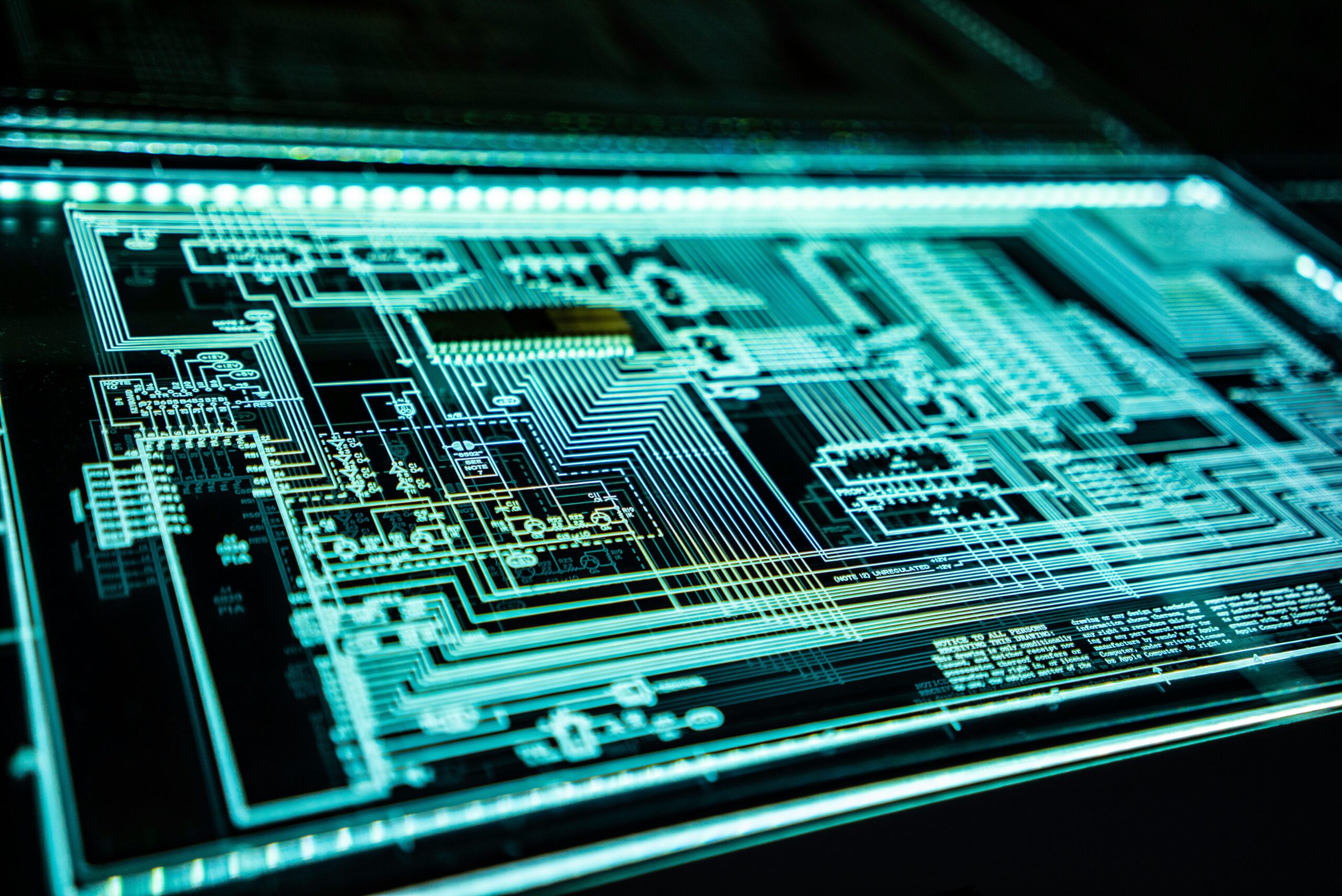

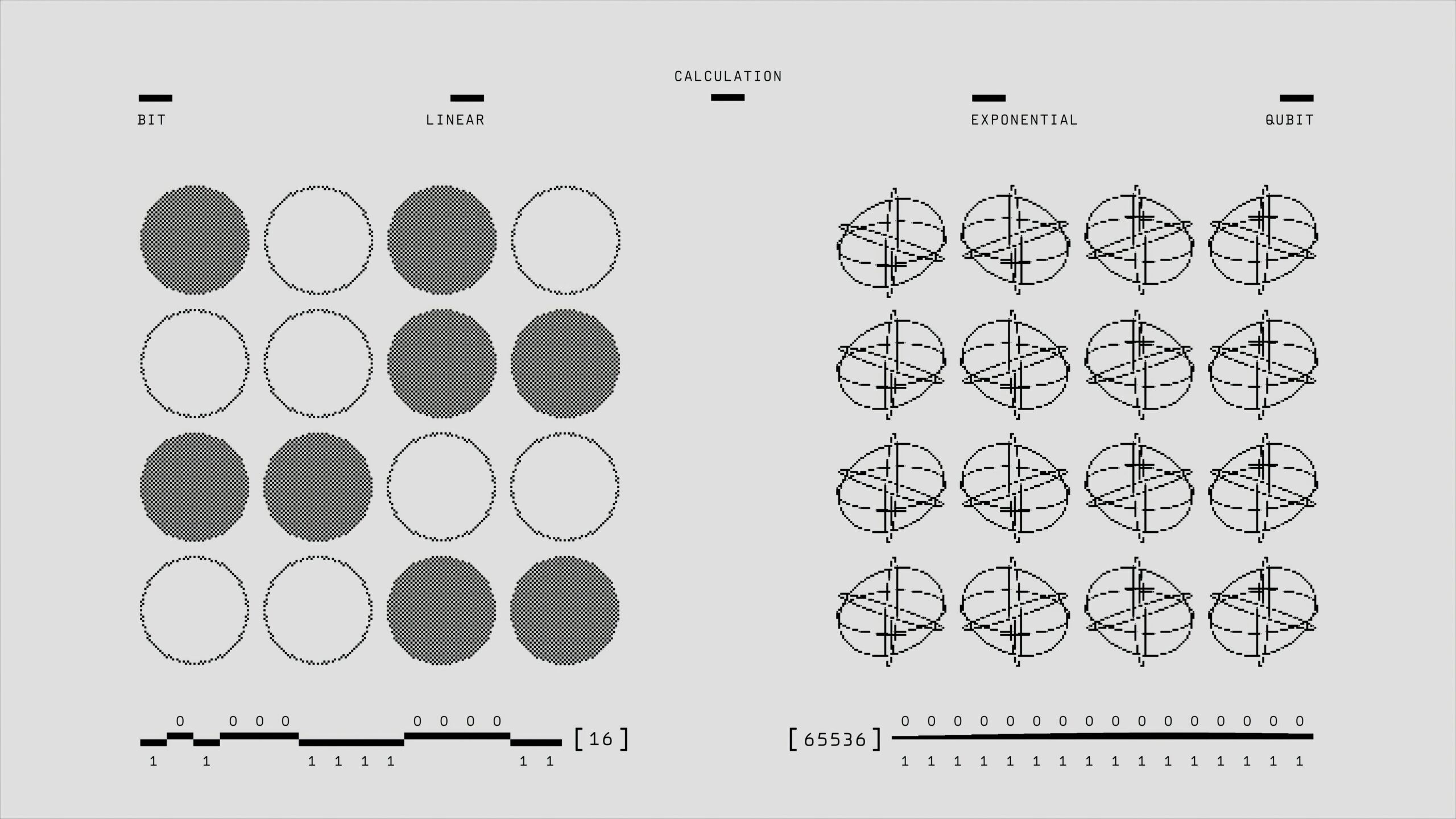

September 2024: Apple has just unveiled its latest flagship devices, the iPhone 16 Pro and iPhone 16 Pro Max, in a highly anticipated event. With cutting-edge features, improved performance, and a sleek design, these new models are already generating buzz among tech enthusiasts. Here’s a breakdown of the key innovations and what to expect from Apple’s newest offerings.

Key Features of iPhone 16 Pro and iPhone 16 Pro Max

1. New A18 Bionic Chip

   Powered by the most advanced A18 Bionic chip, the iPhone 16 Pro and Pro Max promise lightning-fast performance and improved power efficiency, perfect for gaming, multitasking, and augmented reality (AR) experiences.

2. Titanium Alloy Frame

   Apple has introduced a titanium alloy frame for both Pro models, making them lighter yet more durable than their predecessors. This also adds a premium look and feel to the design.

3. Upgraded Camera System

   Equipped with a 48 MP main sensor, the new camera system offers groundbreaking photography and videography improvements. Night mode and cinematic video have been enhanced to give users professional-level quality in all lighting conditions. New AI-driven features enhance image stabilization and allow real-time editing.

4. 120Hz ProMotion Display

   The 120Hz LTPO OLED display makes navigation smoother and enhances visual clarity. Paired with ProMotion technology, users will experience faster refresh rates, which makes everything from scrolling to gaming feel more fluid.

5. Improved Battery Life and Charging

   Thanks to the A18 chip’s efficiency and a larger battery, the iPhone 16 Pro models promise up to 30 hours of video playback. Fast charging and support for MagSafe 3.0 have been enhanced to make wire-free charging even more convenient.

6. USB-C Finally Arrives

   Following EU regulations, Apple has shifted to USB-C charging ports on all iPhone 16 models. This will allow faster data transfers and charging speeds compared to the traditional Lightning port.

7. Dynamic Island 2.0

   The popular Dynamic Island feature returns with new updates, offering better integration with apps, notifications, and live activities, creating an even more immersive user experience.

Price and Availability

- iPhone 16 Pro: Starting at $1,099 for the 128GB model
- iPhone 16 Pro Max: Starting at $1,299 for the 128GB model

Pre-orders begin on October 1, 2024, with shipping expected to start in mid-October. The iPhones will be available in Silver, Space Black, Gold, and the new Titanium Blue finishes.

FAQs:

1. What are the main differences between iPhone 16 Pro and iPhone 16 Pro Max?

   The primary difference is in the size and battery. The iPhone 16 Pro Max has a larger 6.9-inch display compared to the iPhone 16 Pro’s 6.1-inch display, and it also boasts a bigger battery for extended use.

2. Does the iPhone 16 series support satellite connectivity?

   Yes, Apple continues to improve on its emergency satellite connectivity feature, now available globally, allowing users to send messages in areas without cellular reception.

3. Is USB-C charging faster than the previous Lightning port?

   Yes, USB-C enables faster data transfers and quicker charging times, with support for up to 100W fast charging on compatible chargers.

4. How much storage is available for iPhone 16 Pro models?

   The storage options include 128GB, 256GB, 512GB, and the higher-end 1TB for users who need extra space for photos, videos, and apps.

5. Are there any exclusive features in the Pro Max version?

   Apart from the larger size and battery, the iPhone 16 Pro Max also includes an enhanced periscope lens for improved zoom capabilities in photography, allowing for up to 10x optical zoom.

The iPhone 16 Pro and Pro Max mark another leap forward for Apple, delivering cutting-edge technology in performance, design, and sustainability. From the powerful A18 Bionic chip to the upgraded camera system and eco-friendly initiatives, Apple continues to shape the future of mobile technology.
As competition in the smartphone market intensifies, Apple’s latest offerings are set to redefine what users can expect from their devices in 2024 and beyond.